Have you ever stopped to consider how companies today are literally drowning in data? It's no exaggeration to say that we live in an age where information arrives from everywhere—internal systems, applications, sensors, social networks—and the question we ask ourselves is: how can we extract real value from so much scattered information?

Today, we live in a world where data is scattered across different systems, with each department using its own tools, creating veritable information silos. It's as if each sector of the company speaks a different language when it comes to data.

Microsoft Fabric emerges precisely to solve these problems that have long plagued managers and technical teams. The platform operates as Software as a Service, bringing together everything from data engineering to business intelligence into a single experience. We no longer need to jump between different tools to complete data work.

There's something interesting about this approach, called Lakehouse architecture. It takes the best of data lakes—the ability to store any type of data—and combines it with the processing power of data warehouses. It's like having the best of both worlds working together.

With PySpark integrated with Microsoft Fabric, we can perform data transformations, perform complex analyses, and even train machine learning models. All of this happens in a distributed framework that can handle large volumes of data without sacrificing performance.

The medallion architecture organizes data in a meaningful way: Bronze for raw data, Silver for processed data, and Gold for analysis-ready information. It ensures that data undergoes verification and cleaning steps until it reaches a reliable state for decision-making.

In this article, we'll explore how to implement these ideas in practice. From the initial setup to the best ways to optimize everything for scalability, the idea is to show how this approach can transform the way your company handles large volumes of data on a daily basis.

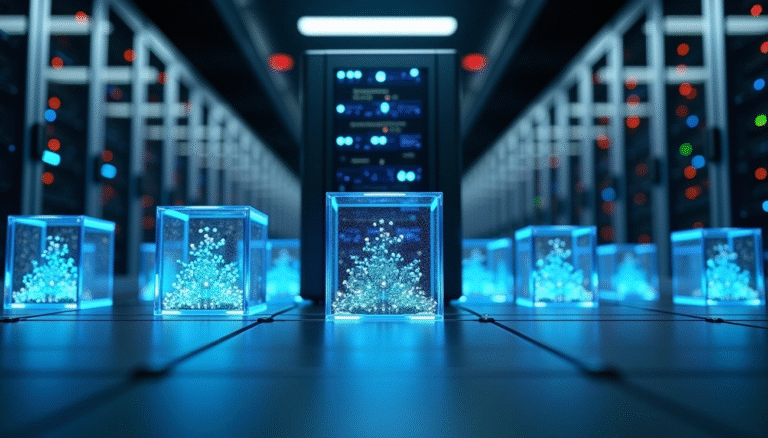

Microsoft Fabric Overview and the Lakehouse Concept

Image Source: James Serra's Blog

When we look at the current data landscape in enterprises, it's clear we need a different approach than what we've traditionally seen. Microsoft Fabric represents this shift in how we handle corporate information.

What is Microsoft Fabric and Why It Matters

Microsoft Fabric serves as a unified platform that brings together data engineering, data science, real-time intelligence, data warehousing, and databases into a SaaS solution [1]. The core idea is to enable organizations to transform complex data repositories into analytics that truly make a difference to the business [1].

The most compelling aspect of this platform is its ability to simplify the entire data lifecycle, from data arrival to insights generation [2]. We no longer need to manually integrate different tools—everything is centralized in a single environment [1]. Permissions and sensitivity labels are automatically inherited across all items, so we can focus on important work instead of managing infrastructure [1].

OneLake appears as a central component of this architecture, functioning as a unified data lake that facilitates data management and access [1]. This approach avoids data silos through a unified storage system that facilitates discovery, sharing, and policy enforcement [1].

Difference between Data Lake, Data Warehouse and Lakehouse

Data lakes were created to store large volumes of raw data in their original format while keeping costs low [3]. They can handle structured, semi-structured, and unstructured data without specific restrictions [4]. However, they present significant challenges – they are often disorganized and complex to build and manage [6].

Data warehouses follow a different philosophy: they aggregate, cleanse, and prepare data specifically for business intelligence and analytics [3]. They come with built-in analytics engines and reporting tools, while data lakes require external tools for processing [3].

Lakehouse emerges as a clever combination of these two approaches. It offers the data storage flexibility of a lake with the high-performance analytical capabilities of a warehouse [3]. It can store data in any format while maintaining low costs while supporting fast queries and optimized analytics [3].

How Lakehouse Solves Data Silos

Data silos pose a serious problem in organizations – they impede a complete view of information and directly affect leaders' ability to make data-driven decisions [6]. Different teams end up collecting, managing, and storing data separately, with limited access between groups [6].

Microsoft Fabric's Lakehouse architecture addresses this problem by implementing consistent governance policies through unified metadata management and schema enforcement [7]. A single catalog manages permissions, lineage, and quality controls, maintaining regulatory compliance while reducing the risk of unauthorized access [7].

ACID transaction support ensures data consistency and prevents corruption even during concurrent operations [6]. The centralized approach eliminates governance gaps that typically appear between separate warehouse and lake environments [7].

Here, it's important to highlight an additional benefit: companies that migrate from traditional siloed architectures to a modern data lakehouse gain complete data visibility and reduce costs [6]. The lakehouse architecture eliminates the need to maintain separate systems for different workloads, reducing capital and operational expenses [7].

Building a Microsoft Fabric Lakehouse from Scratch

There comes a time when we need to get our hands dirty and create our first Lakehouse on Microsoft Fabric. Unlike traditional solutions that require complex configurations, the process here is much more straightforward than you might think.

Workspace and permissions configuration

The first step is to have a workspace enabled in Microsoft Fabric. After logging into the portal, go to the side menu and select "Workspaces," then "New Workspace." Fill in a name and description that makes sense for your company.

In advanced configurations, you can choose between Trial, Fabric capacity with SKU F64, or Power BI Premium capacity with SKU P1. This choice will depend on your specific scenario and organizational needs.

One nice thing here is that Fabric automatically inherits permissions and sensitivity labels for all related items. This means you don't have to manually configure access control for each component—a significant time saver for teams.

Lakehouse item creation and initial structure

With the workspace configured, creating Lakehouse follows a few simple steps:

- In the workspace, click “New Item” and choose “Lakehouse” in the “Store Data” section.

- Name your Lakehouse – avoid spaces and special characters like @, #, $, %

- Click “Create”

Microsoft Fabric automatically provisions three essential components: the Lakehouse itself for storage and processing, the SQL Analytics Endpoint for SQL queries, and the Semantic Model for reporting and visualizations.

The internal structure is organized into two main areas. The folder /Files stores raw data in various formats – CSV, Parquet, images – functioning as our Bronze layer of the medallion architecture. The folder /Tables stores refined data in Delta Lake format, ready for business analysis, which would be our Gold tier.

SQL Endpoints and Standard Semantic Model

There's a key differentiator in Microsoft Fabric that's worth highlighting: the automatic generation of a SQL Analytics endpoint when you create a Lakehouse. This endpoint provides a read-only relational interface over Delta tables, allowing you to explore and query data using T-SQL.

Until August 2025, each Lakehouse had a default semantic model automatically generated. However, this functionality is being discontinued to provide greater control and governance. Now, you need to explicitly create semantic models, which actually provides more transparency and accountability.

To query data via the SQL Analytics endpoint, simply select "SQL Analytics endpoint" from the Lakehouse drop-down menu. From there, you can run SQL queries or directly visualize table data. This endpoint also allows you to connect to external tools like Power BI Desktop, SQL Server Management Studio, or Azure Data Studio using the connection string available in the settings.

Implementing the Medallion Architecture: Bronze, Silver, and Gold

Image Source: Piethein Strengholt – Medium

There's an interesting way to organize data that makes perfect sense when you think about it. Medallion architecture works like a production line where data is refined step by step, going from a raw state to a form ready to generate valuable insights for the business.

The idea behind this approach is simple: instead of trying to process everything at once and creating a mess, we organize the work into three clearly defined tiers: Bronze for newly arrived data, Silver for data that has already undergone initial cleaning, and Gold for information ready for analysis and decision-making.

Raw Data Ingestion in the Bronze Tier

The Bronze tier is like a warehouse where everything arrives first. Here, data is stored exactly as it came from the original sources—sales systems, sensors, apps, social media—in short, everything mixed together and without much processing [8].

Microsoft Fabric offers two main ways to bring data into this layer: a one-time load for historical data and an incremental load for ongoing updates [9]. During this process, several components work together:

The Control Table decides which items will be moved to the lakehouse. The Ingestion Component copies the data from the original system. Auditing keeps an eye on everything, recording what happens and identifying errors. Notification alerts the team when something important happens. Configuration Management manages system configurations.

An important feature of Bronze data is that it is preserved in its original format. Even if it is later updated or deleted, Delta Lake keeps the previous versions, ensuring that we can always go back and see how the data looked in the past [10].

Transformations with PySpark Notebooks in the Silver Tier

This is where the real magic starts to happen. The data leaves Bronze and undergoes transformations that make it more organized and useful. We use PySpark notebooks that always follow a pattern: we load the necessary packages, import auxiliary notebooks, read the data, apply the transformations, and write everything to Delta tables [5].

The most common transformations include things like standardizing column names—because each system tends to name things differently—converting data types, adding auxiliary columns that make later analysis easier, and performing basic cleanup operations.

Notebooks can use the Delta API to perform merge operations, which means updating existing records while simultaneously inserting new data [5]. It's an efficient way to keep everything up to date without losing history.

At this stage, we also perform critical checks: we validate that the data schema is correct, handle null values, remove duplicates, resolve issues with data that arrived out of order, and perform quality checks [11]. All of this ensures that the data is structured and reliable for the next steps.

Data Modeling and Aggregations in the Gold Tier

The Gold tier is the final result of this entire process. Here, data is optimized for visualizations and reports, organized in a way that makes life easier for those who need to make decisions based on this information. We typically implement a dimensional model with fact and dimension tables, which is a classic and efficient way to organize data for complex analyses [12].

For companies that need real-time insights, Microsoft Fabric allows you to aggregate and calculate data as it arrives through materialized views. This provides quick access to the latest values, ensuring that decisions are based on up-to-date information [8].

When we implement this comprehensive architecture on Microsoft Fabric, companies gain a much clearer view of all their data. Information silos disappear, and operational costs decrease. It's a way of structuring data that truly works to extract valuable insights efficiently and reliably.

Power BI Integration Using Direct Lake Mode

Image Source: Medium

We've reached the point where the data we've so carefully organized finally comes to life through visualizations. The integration between Lakehouse and Power BI in Microsoft Fabric works in a way that's truly impressive in its simplicity.

Connecting Power BI to the Lakehouse Semantic Model

The connection is straightforward. In your Lakehouse, you'll find the "SQL analytics endpoint" option in the drop-down menu. This displays all the tables we previously created in the Bronze, Silver, and Gold tiers. To transform this data into a robust analytical model, we select the "Model" tab, where we can access Power BI's default semantic model.

The next step involves defining relationships between tables. You drag fields like "CityKey" from a fact table and drop them onto the corresponding field in the dimension table. In the relationship settings, we establish a "Many-to-One" (*:1) cardinality with a single filter direction, ensuring that referential integrity works correctly. This process transforms siloed data into a cohesive dimensional model, ready for deeper analysis.

Advantages of Direct Lake over Import and DirectQuery

There's something truly compelling about Direct Lake mode. It combines the best of traditional data access methods in a way that solves long-standing problems. DirectQuery queries the source directly for each execution, which ultimately impacts performance. Import mode requires full data refresh periodically, consuming time and resources.

Direct Lake loads files directly into memory without duplication. This eliminates the need for explicit imports, allowing you to capture changes to the source as they occur. Your reports stay current without the operational burden of scheduled updates.

For companies that handle large volumes of data or require frequent updates, Direct Lake offers a solution that truly makes a difference. You get performance similar to Import mode with the latest DirectQuery.

Creating reports with near-real-time data

Once you've configured your model, you can start creating reports by selecting "New report" in Power BI. On the canvas, you can drag fields from tables to create dynamic visualizations that respond in near-real time to changes in the underlying data.

The real advantage for your company lies in the speed and flexibility of this process. Queries in Direct Lake mode are processed by the VertiPaq engine, ensuring significantly higher performance than traditional DirectQuery. Update operations for Direct Lake models involve only metadata and are completed in seconds, while updates in Import mode can consume hours and considerable resources.

There's a mechanism called "framing" that analyzes metadata from Delta tables and updates references to the most recent files. This ensures that reports reflect the current state of the business without operational overhead. This means the team spends less time managing data and more time extracting valuable insights for strategic decisions.

Best Practices and Optimizations for Scalability

There comes a time when your company's data starts to grow and you notice queries are slowing down. It's a situation everyone who works with data eventually faces: what worked well with a small amount of data starts to slow down when the volume increases.

The point is that enterprise data has this tendency to grow exponentially, and if we don't take care of optimizations from the start, we end up facing performance issues that affect the entire analytics team.

Using V-Order for Optimized Reading

There's a technology called V-Order that can make a significant difference in your query speed. It reorganizes data in Parquet files more intelligently, applying special sorting and compression that results in up to 50% faster reads [13].

The interesting thing is that even adding about 15% in recording time saves you up to 50% in storage [13]. It's like making a small investment to get a much greater return on analysis performance.

To enable, you can configure spark.sql.parquet.vorder.default to true, or else execute OPTIMIZE VORDER on existing tables. This optimization works especially well when you have heavy analytical workloads and reports that need to run quickly.

Maintenance with OPTIMIZE and VACUUM commands

One thing I've learned from working with data is that it needs regular cleaning, just like a house. Over time, you end up with lots of small files scattered around, and this hurts query performance [14].

The OPTIMIZE command merges these small files into larger files, ideally around 1GB each. VACUUM removes those old files that are no longer useful, freeing up storage space [14].

You can perform this maintenance directly in the interface—just right-click on the table and choose "Maintenance"—or use notebooks if you prefer to automate it. There's just one important point: VACUUM affects the ability to go back in time, so maintain at least seven days of data retention [14].

Separation of layers by business domain

Another practice that makes a big difference is organizing data according to the company's business domains. It's like separating files by department—sales, marketing, finance—each in its own place [15].

This not only makes management easier but also significantly improves query performance, as the system can eliminate partitions that aren't relevant for that specific analysis. Furthermore, it's much easier to control who has access to what, with each department managing its own data.

Ultimately, these optimization practices mean your team spends less time waiting for queries to run and more time extracting valuable insights from the data.

Conclusion

We've reached the end of our Microsoft Fabric journey and seen how this platform can change the way we manage data in companies. The issue of information silos, which has always plagued managers, finds a practical solution here, where everything works seamlessly.

The Lakehouse architecture truly combines the benefits of data lakes with the power of data warehouses. This isn't just theory—in practice, it works and solves real problems we face every day with data scattered across different systems.

The implementation of Bronze, Silver, and Gold tiers provides a meaningful organization. Data is gradually refined, undergoing verification and cleaning until it reaches a point where we can trust it to make important decisions. This structure significantly improves governance and improves the quality of information that reaches the right people.

Power BI's Direct Lake mode caught my attention for what it offers: near-real-time analytics without the usual performance issues. Teams can make faster decisions because the data is always up-to-date.

Optimizations like V-Order and the OPTIMIZE and VACUUM commands make a real difference when implemented correctly. Queries are faster, storage costs decrease, and technical staff can focus on what really matters instead of putting out fires.

Today, we have a growing demand for real-time data analysis and the need to unify information from disparate sources. Microsoft Fabric can meet these needs in a way that simplifies infrastructure while expanding analytics capabilities.

If you'd like to learn more about how to implement this in your company, please contact us. Our team has experience tailoring this solution to specific needs and ensuring the implementation works as intended.

Key Takeaways

Discover how Microsoft Fabric can transform your enterprise's data management through a scalable and integrated Lakehouse architecture:

• Microsoft Fabric unifies the entire data stack on a SaaS platform, eliminating silos and integrating everything from engineering to business intelligence in a single environment.

• Medallion Architecture (Bronze-Silver-Gold) Progressively structures data from raw ingestion to refined insights, ensuring quality and governance at every layer.

• Direct Lake mode revolutionizes analytics combining Import performance with DirectQuery's timeliness, offering near-real-time reporting without operational overhead.

• V-Order Optimizations and OPTIMIZE/VACUUM Commands accelerate queries by up to 50% and reduce storage costs, maintaining sustainable performance on an enterprise scale.

• Native integration with Power BI allows you to create sophisticated visualizations directly from Lakehouse data, transforming information into agile strategic decisions.

Properly implementing Microsoft Fabric not only simplifies data infrastructure but also democratizes access to information, allowing your team to focus on creating value instead of managing technical complexities. This unified approach represents the future of enterprise data analytics.

References

[1] – https://learn.microsoft.com/en-us/fabric/fundamentals/microsoft-fabric-overview

[2] – https://saxon.ai/blogs/tap-into-the-power-of-unified-data-with-microsoft-fabric-top-9-benefits-and-use-cases/

[3] – https://www.ibm.com/think/topics/data-warehouse-vs-data-lake-vs-data-lakehouse

[4] – https://www.montecarlodata.com/blog-data-warehouse-vs-data-lake-vs-data-lakehouse-definitions-similarities-and-differences/

[5] – https://www.datacamp.com/blog/what-is-microsoft-fabric

[6] – https://www.databricks.com/blog/data-silos-explained-problems-they-cause-and-solutions

[7] – https://www.prophecy.io/blog/lakehouse-architecture-guide

[8] – https://learn.microsoft.com/en-us/fabric/real-time-intelligence/architecture-medallion

[9] – https://support.fabric.microsoft.com/pt-br/blog/demystifying-data-ingestion-in-fabric-fundamental-components-for-ingesting-data-into-a-fabric-lakehouse-using-fabric-data-pipelines?ft=Data-factory:category

[10] – https://techcommunity.microsoft.com/blog/modernizationbestpracticesblog/building-bronze-layer-of-medallion-architecture-in-fabric-lakehouse-using-wal2js/4208928

[11] – https://endjin.com/what-we-think/talks/microsoft-fabric-processing-bronze-to-silver-using-fabric-notebooks

[12] – https://docs.databricks.com/aws/en/lakehouse/medallion

[13] – https://hectorv.com/2023/10/01/different-approaches-to-ingest-and-transform-data-in-a-medallion-architecture-using-microsoft-fabric/

[14] – https://learn.microsoft.com/en-us/fabric/data-engineering/delta-optimization-and-v-order

[15] – https://learn.microsoft.com/en-us/fabric/data-engineering/lakehouse-table-maintenance

[16] – https://learn.microsoft.com/en-us/fabric/onelake/onelake-medallion-lakehouse-architecture